I got a good reader question today about incrementing variables. This reader is completely new to programming and was getting confused about two common ways to increment variables:

```javascript
count = count + 1;
```

a statement that often appears in a while loop, and

```javascript
i++;
```

a statement that often appears in a for loop, like this:

```javascript
for (var i = 0; i < 10; i++) {
  // ...
}
```

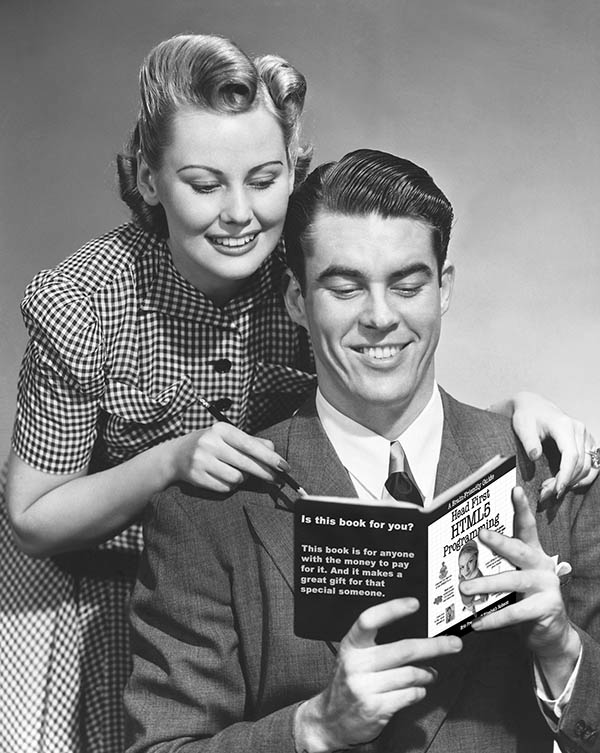

While this question is about JavaScript, these lines of code could appear identically (or almost identically) in many languages! In Head First HTML5 Programming, we speed through these language basics pretty quickly, so it’s understandable that someone new to programming might be a bit confused on this point. Plus, and I hate to admit this, I think we completely forgot to explain what the ++ and -- operators actually do! (We’ll fix that in the next printing!)

The trick to understanding the first one is to remember that when you use =, you are assigning a value to a variable. You’re not comparing two values; you do that with a comparison operator, such as == (or ===, JavaScript’s strict equality operator).
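To see the two operators side by side, here is a minimal sketch. The variable names `score`, `isFour`, and `isFive` are just for illustration and don’t come from the book.

```javascript
// A single = assigns: store the value 4 in the variable score.
var score = 4;

// A double == compares: ask whether score is equal to 4.
// Comparing does not change score; it just produces true or false.
var isFour = (score == 4);
var isFive = (score == 5);

console.log(isFour); // true
console.log(isFive); // false
console.log(score);  // 4 (unchanged by the comparisons)
```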

So, when you write

```javascript
count = count + 1;
```

you are saying: “Take the current value of the variable count, add 1 to it, and then change the value of count to that new value.”

So if count is 4 when this line runs, count will be 5 afterward.
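Here’s that single step in code, followed by the kind of while loop where this statement usually shows up. It’s a small sketch; the `steps` variable and the limit of 10 are illustrative choices, not something from the book.

```javascript
var count = 4;
count = count + 1; // read count (4), add 1, store the result (5) back in count
console.log(count); // 5

// A typical while loop: the body has to update the variable itself,
// otherwise the condition steps < 10 would never become false.
var steps = 0;
while (steps < 10) {
  steps = steps + 1;
}
console.log(steps); // 10
```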

Adding 1 to a variable that holds a number is something we do so often in programming that language designers have added shortcuts for it. One shortcut used in many languages is the ++ operator. Writing

```javascript
i++;
```

is the same as writing

```javascript
i = i + 1;
```
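One way to convince yourself is to run both forms side by side. In this quick sketch, `a` and `b` are throwaway names chosen for illustration.

```javascript
var a = 7;
var b = 7;

a++;       // shortcut form
b = b + 1; // long form

console.log(a, b);    // 8 8
console.log(a === b); // true
```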

It’s just a shortcut to do the same thing. So, when you see a for loop like this:

```javascript
for (var i = 0; i < 10; i++) {
  // ...
}
```

there are three things happening in that for loop. First, var i = 0; declares the variable i and initializes it to 0. Next, the conditional expression i < 10 is evaluated: is i less than 10? When the loop has just started, it is (in fact, i is 0), so all the statements in the body of the for loop run (that is, everything between the { and the }). Finally, i++ increments i, making its new value 1.

Then the loop starts over, except that it skips the initialization step and goes straight to the conditional expression: is i still less than 10? Yes, it’s 1, so the statements in the body run again. And so on. When i is incremented to 10, the conditional expression is no longer true, so the loop stops. In total, the body runs 10 times, with i taking the values 0 through 9.
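If you want to watch those steps happen, the sketch below records the value of i each time the body runs. The `seen` array is added purely for illustration.

```javascript
var seen = []; // collects the value of i on each pass through the body

for (var i = 0; i < 10; i++) {
  // The body runs only after the condition i < 10 has been checked and was true.
  seen.push(i);
}

console.log(seen.join(", ")); // 0, 1, 2, 3, 4, 5, 6, 7, 8, 9
console.log(seen.length);     // 10 (the body ran ten times)

// Because i was declared with var, it is still visible after the loop.
// It holds 10, the value that made i < 10 false and ended the loop.
console.log(i); // 10
```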

The counterpart to ++ is --, which subtracts 1 from the value of a variable. In other words, writing

```javascript
i--;
```

is the same as writing

```javascript
i = i - 1;
```
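The same side-by-side check works for --. The sketch also uses -- in a for loop that counts down. The names `x`, `y`, `j`, and `countdown`, and the starting value of 3, are illustrative choices.

```javascript
var x = 5;
var y = 5;

x--;       // shortcut form
y = y - 1; // long form
console.log(x, y); // 4 4

// Counting down: start at 3, keep going while j > 0, subtract 1 after each pass.
var countdown = [];
for (var j = 3; j > 0; j--) {
  countdown.push(j);
}
console.log(countdown.join(", ")); // 3, 2, 1
```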

I hope that helps clear up the reader’s question. Keep sending me your questions if you run into any problems. Thank you!

I don’t know much about JavaScript, but in a widely used language like C, the increment operator and the addition operator are not the same, and what they do depends on the hardware platform you are compiling for.

Dave, you are right! In C, increment can also be used on pointers, and that produces a very different result: the pointer advances by the size of the type it points to. In JavaScript, there are no pointers, only references to objects, and here we’re talking only about integer arithmetic. There is also the issue of whether the increment happens before or after another operation in the same expression (prefix ++i versus postfix i++). In the simple examples above, though, the increment is a statement on its own, so it works the same way as adding 1. And finally, there is the issue of the size of the number you are incrementing. For the most part in JavaScript, we aren’t dealing with very large or very small numbers, and with integer values (rather than fractional ones) like the ones in these examples, increment produces the same result as addition. There’s definitely more depth to this issue, but for the purposes of understanding the book, this is good enough!
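Here’s a small sketch of the "before or after" issue. When ++ is used inside a larger expression, postfix `a++` produces the old value, while prefix `++b` produces the new one. Either way, the variable itself goes up by 1. The last part illustrates the number-size issue. JavaScript numbers are 64-bit floating point, so whole numbers are exact only up to `Number.MAX_SAFE_INTEGER`. Past that point, adding 1 can round back to the same value, and `++` and `+ 1` round the same way. All the variable names here are illustrative.

```javascript
// Postfix: the expression a++ evaluates to a's OLD value, then a is incremented.
var a = 5;
var fromPostfix = a++;
console.log(fromPostfix, a); // 5 6

// Prefix: the expression ++b increments b first, then evaluates to the NEW value.
var b = 5;
var fromPrefix = ++b;
console.log(fromPrefix, b); // 6 6

// As a statement on its own (like i++ in a for loop), the difference disappears:
// either form just adds 1 to the variable.

// Number size: whole numbers are exact only up to Number.MAX_SAFE_INTEGER (2^53 - 1).
var big = Number.MAX_SAFE_INTEGER + 1; // 2^53
var viaAddition = big + 1;             // 2^53 + 1 is not representable; rounds back to 2^53
var viaIncrement = big;
viaIncrement++;                        // the same rounding happens here
console.log(viaAddition === big, viaIncrement === big); // true true
```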